- #SOMETHING LIKE HTTRACK FOR MAC INSTALL#

- #SOMETHING LIKE HTTRACK FOR MAC UPDATE#

- #SOMETHING LIKE HTTRACK FOR MAC ARCHIVE#

- #SOMETHING LIKE HTTRACK FOR MAC MAC#

#SOMETHING LIKE HTTRACK FOR MAC ARCHIVE#

I’m still interested in S3 as an option for this, as it’s sort of the perfect hardware for the job (is it really hardware?), but instead I chose to spin up a micro instance on EC2 and host the sites there. Here’s an example of one of our scraped sites. It’s pretty standard practice on the web to create 301 redirects for sites you are moving to a new domain, and I was able to do this pretty easily using an .htaccess file and a few redirect rules. This lets you browse each site by going to its original URL or any of its pages.

As with anything, there are downsides to using this technique. The main one is that there is no more interactivity. If your website had a commenting feature built in, it won’t work anymore. If it ran off a CMS like WordPress, you won’t be able to log in and make edits to your content. Scraping also hiccups on some types of URLs, depending on the underlying structure or technology. I found this to be a small problem with things like rollover images and dynamic hyperlinks (especially links with ? marks in them). But most of these issues can be resolved with a little cleanup.

Since you are scraping the site and turning it into static HTML, it does make sense to make a real archive of your original site files and any attached database.
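The original .htaccess rules are not reproduced here, so the following is only a minimal sketch of the idea. It assumes the following:

* Apache has mod_rewrite enabled.
* .htaccess overrides are allowed.
* The retired domain's DNS now points at the archive server.

The Python writes rules that 301-redirect every path on an old host to the same path inside that site's sub-folder on the archive domain. The hostnames, the `oldsite` folder and the `redirect_rules` helper are hypothetical.

```python
import re

# Hypothetical hostnames: replace with your retired domain and archive host.
OLD_HOST = "oldsite.example.com"
ARCHIVE_BASE = "https://archive.example.org"


def redirect_rules(old_host: str, archive_base: str, folder: str) -> str:
    """Return .htaccess (mod_rewrite) rules that permanently (301) redirect
    every path on old_host to the same path under archive_base/folder/."""
    host_pattern = re.escape(old_host)  # escape dots so they match literally
    target = f"{archive_base.rstrip('/')}/{folder.strip('/')}/$1"
    lines = [
        "RewriteEngine On",
        # Only act on requests for the old host, with or without "www.".
        f"RewriteCond %{{HTTP_HOST}} ^(www\\.)?{host_pattern}$ [NC]",
        # Capture the requested path and append it to the archive location.
        f"RewriteRule ^(.*)$ {target} [R=301,L]",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Paste the output into the .htaccess file at the server's document root.
    print(redirect_rules(OLD_HOST, ARCHIVE_BASE, "oldsite"), end="")
```

Because the rule captures the whole path, a deep link such as `/exhibits/page.html` on the old domain lands on the matching page in the archive. That link only works if the scraped copy kept the same directory layout.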

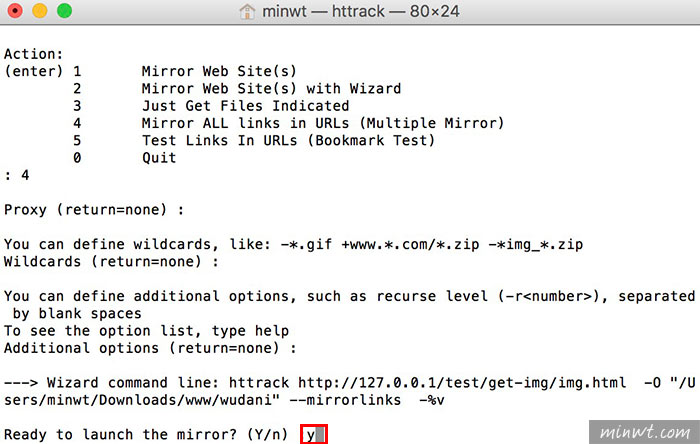

Here is httrack running in my Mac’s terminal. Once you have scraped a site, it probably makes sense to move it somewhere for safekeeping. It didn’t really make sense after years of producing these sites with different methodologies, so we decided to create a dedicated domain and place each scraped site in a sub-folder of it. Initially I thought it would be really nice to host these static sites on Amazon’s S3. I know it’s possible to do this, but I found that many of the pages wouldn’t load correctly.
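This is a minimal sketch, not the exact command used for our sites. It assumes HTTrack is installed and on the PATH. The Python builds an httrack call that writes each mirror into its own folder, named after the site's hostname, in the spirit of the one-sub-folder-per-site layout described above. `-O` (output path), `+` scan rules and `-v` (verbose) are standard HTTrack options. The URL, the `archive` folder and the helper names are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path
from urllib.parse import urlparse


def httrack_command(site_url: str, archive_root: str) -> list[str]:
    """Return an httrack argv that mirrors site_url into archive_root/<hostname>."""
    host = urlparse(site_url).hostname
    if not host:
        raise ValueError(f"expected an absolute URL, got {site_url!r}")
    return [
        "httrack",
        site_url,
        "-O", str(Path(archive_root) / host),  # one output folder per site
        f"+{host}/*",                          # scan rule: accept URLs on this host
        "-v",                                  # verbose progress in the terminal
    ]


def mirror(site_url: str, archive_root: str) -> None:
    """Run the mirror (a real download), failing early if httrack is missing."""
    if shutil.which("httrack") is None:
        raise RuntimeError("httrack not found on PATH (on a Mac: brew install httrack)")
    subprocess.run(httrack_command(site_url, archive_root), check=True)


if __name__ == "__main__":
    # Hypothetical example: print the command instead of downloading anything.
    print(" ".join(httrack_command("https://oldsite.example.org/", "archive")))
```

Keeping command construction separate from `mirror()` lets you inspect or log the exact call before starting a long crawl.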

#SOMETHING LIKE HTTRACK FOR MAC INSTALL#

You can mirror a site with a single command-line call to wget. Wget works pretty well, but it’s not really the ideal tool for the job. It’s great for downloading files to your Linux server (a nice way to install WordPress on a new Linux box), but it doesn’t do much else.
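As a rough illustration (not the author's original command), the Python below assembles a commonly used set of GNU wget mirroring flags. All of these flags exist in GNU wget. `--restrict-file-names=windows` is included because it rewrites the `?` in query-string URLs to `@` in saved file names, which touches on the dynamic-link problem mentioned earlier. The URL, destination folder and helper names are hypothetical.

```python
import subprocess


def wget_mirror_command(site_url: str, dest_dir: str) -> list[str]:
    """Return a wget argv that saves a browsable static copy of site_url."""
    return [
        "wget",
        "--mirror",             # recursive, infinite depth, with timestamping
        "--page-requisites",    # also fetch the images, CSS and JS pages need
        "--adjust-extension",   # add an .html suffix to HTML pages lacking one
        "--convert-links",      # rewrite links to point at the local copies
        "--no-parent",          # never climb above the starting directory
        "--restrict-file-names=windows",  # Windows-safe names; '@' replaces '?' before queries
        f"--directory-prefix={dest_dir}",
        site_url,
    ]


def run_mirror(site_url: str, dest_dir: str) -> None:
    """Perform the actual download (requires wget and network access)."""
    subprocess.run(wget_mirror_command(site_url, dest_dir), check=True)


if __name__ == "__main__":
    # Hypothetical site and folder: print the command rather than running it.
    print(" ".join(wget_mirror_command("https://oldsite.example.org/", "archive")))
```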

#SOMETHING LIKE HTTRACK FOR MAC MAC#

One option we have been using here at Cooper-Hewitt is called web scraping. This is a pretty common technique that essentially creates a non-dynamic, static version of any website. There are several ways of scraping a site, one of the simplest being the wget program. Wget is a pretty simple program that comes installed on most Linux distributions. You can also install it on your Mac using Homebrew.
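Here is a small sketch for checking a Mac before scraping. It reports which of the tools are missing from the PATH and suggests a Homebrew command for each one. It assumes the Homebrew formula name matches the command name, which holds for `wget` and `httrack`. The `missing_tools` helper is hypothetical.

```python
import shutil


def missing_tools(tools: list[str]) -> dict[str, str]:
    """Map each tool not found on PATH to a suggested Homebrew install command.

    Assumes the formula name equals the command name (true for wget, httrack).
    """
    return {
        tool: f"brew install {tool}"
        for tool in tools
        if shutil.which(tool) is None
    }


if __name__ == "__main__":
    for tool, hint in missing_tools(["wget", "httrack"]).items():
        print(f"{tool} not found; try: {hint}")
```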

#SOMETHING LIKE HTTRACK FOR MAC UPDATE#

I’m talking more about preserving old web outliers: those exhibition micro-sites and one-off contest sites you might have produced years ago. The next issue is that in order for these sites to live on, you need to provide some level of maintenance for them. Nearly every website these days has a database running the show, so in order for these sites to work, they need to have an open connection to that database. This means you need to continually update the application code and do crazy things like upgrade to MySQL 5, 6, 7 and so on.

I would imagine that just about any organization out there will eventually amass a collection of legacy web properties. I know we have! Be it a microsite from 1998 or some fantastic (at the time) forum that has now been declared “dead”, it’s a problem. The big question is what to do with them. There are a few technical problems at work here. First, there is a feeling of permanence on the Internet that is hard to ignore: you want these legacy sites to live on in some form. […] is a pretty good system for looking back at your main website, but it’s a moving target, constantly being updated with each iteration of your site.
